By Aakriti Bansal

Wadia also stressed the role of policy. “If we could create policy around AI use in schools, freeing up teachers from the burden of portion completion etc., then the teachers who are AI literate can be allowed to innovate with regard to teaching-learning,” she said.

However, she acknowledged that broader implementation faces political and structural hurdles. “The union government can only make changes to CBSE and central schools,” she noted. “Many state governments are not on board… So the possibility of enlightened policy coming into all schools in the country is bleak.”

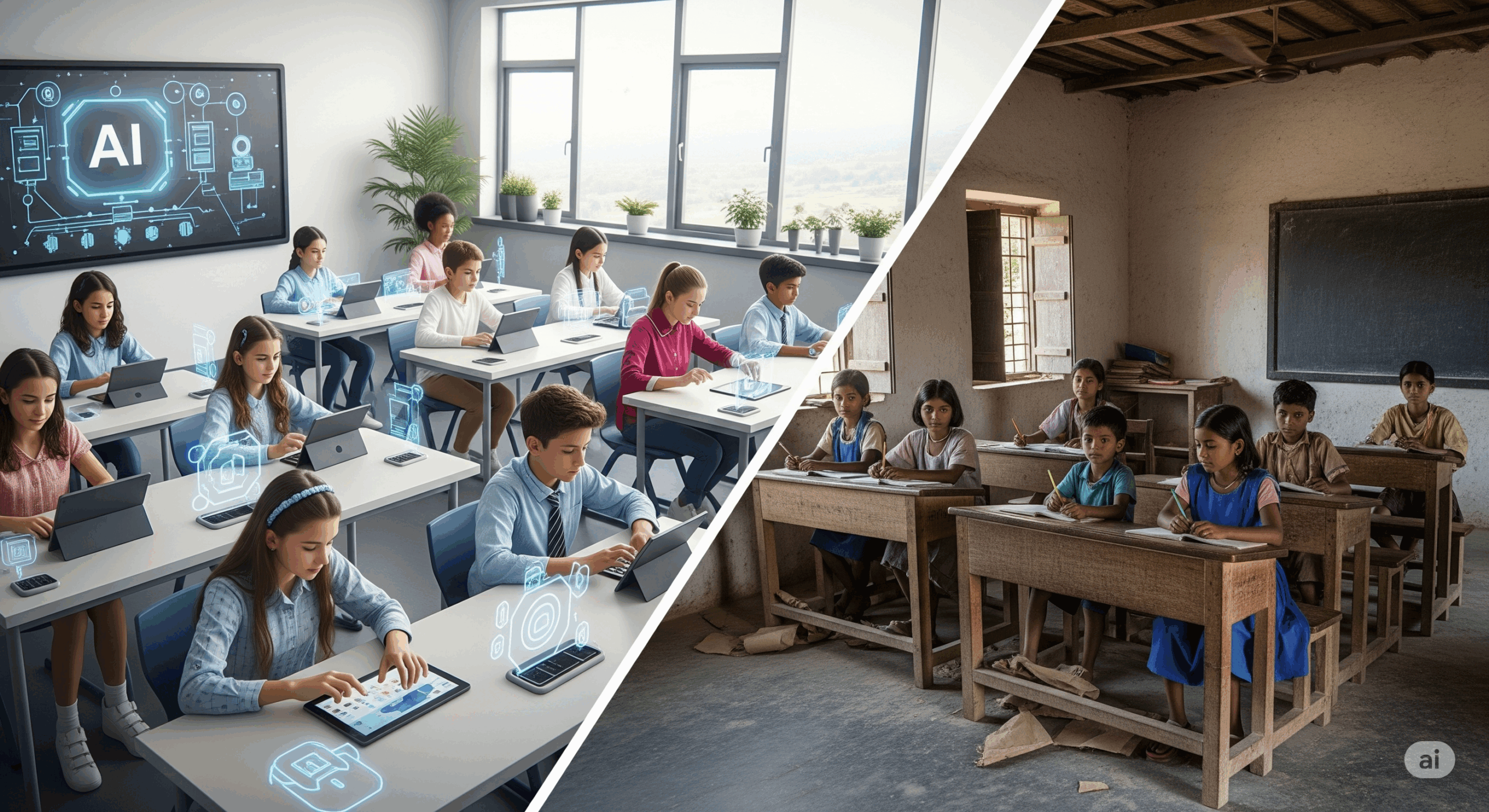

Infrastructure and Access Are Still Barriers

According to the 2023–24 UDISE+ report, only 7.48 lakh of India’s 14.71 lakh schools have access to computers for teaching and learning. About 7.92 lakh schools have Internet access, and 8.41 lakh schools report having any form of computer facilities. Government schools fare worse: only 21.4% have desktops, 22.2% have tablets, and just 21.2% have smart classrooms, according to a South China Morning Post report. This digital shortfall poses a major barrier to implementing AI tools that rely on stable connectivity and device access.
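For readers who want to check the arithmetic, the short Python sketch below converts the UDISE+ counts quoted above into shares of all schools. The counts come from the figures cited in this article; the variable and function names are illustrative assumptions, not part of the report.

```python
# School counts in lakh (1 lakh = 100,000), as quoted in this article
# from the 2023-24 UDISE+ report. Names below are illustrative only.
TOTAL_SCHOOLS_LAKH = 14.71

schools_with_lakh = {
    "computers for teaching and learning": 7.48,
    "Internet access": 7.92,
    "any computer facilities": 8.41,
}


def share_percent(count_lakh, total_lakh=TOTAL_SCHOOLS_LAKH):
    """Return count_lakh as a percentage of total_lakh."""
    return 100.0 * count_lakh / total_lakh


# Share of all schools that have each facility.
shares = {label: share_percent(count) for label, count in schools_with_lakh.items()}

for label, pct in shares.items():
    print(f"{label}: {pct:.1f}% of schools have it, {100 - pct:.1f}% do not")
```

On these figures, roughly half of all schools lack computers for teaching and learning, and a little under half lack Internet access.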

Wadia said smartphones might be sufficient in the classroom, since teachers generally have them, and that state governments could update rules to support mobile use in teaching. She added that tools like Bhashini are catching up on language support, which could make AI more accessible in diverse classrooms. However, she noted that limited access to devices and stable connectivity remains a challenge. AI tools require a minimum level of infrastructure that many schools haven’t yet achieved.

The Risks of Using AI in Classrooms

Generative AI tools like Gemini can “hallucinate,” or produce incorrect but plausible-sounding information. This poses risks in classrooms where students and teachers may not always detect such errors. A 2023 MIT Sloan EdTech report found that large language models frequently fabricate data or sources. It cited the Mata v. Avianca case, where ChatGPT generated fake legal citations that were submitted in court.

The report also warned that AI-generated content can reinforce gender and racial stereotypes, potentially introducing bias into teaching materials. In a classroom setting, this could lead to students receiving inaccurate or culturally insensitive examples without warning.

While Google says it optimises Gemini for “learning science,” the internal workings of the tool remain opaque. According to Google’s blog, NotebookLM does cite the sources it pulls from, such as uploaded documents or transcripts. However, the tool still functions as a “black box” in that it doesn’t reveal the internal reasoning behind its answers or how it weighs one source over another.

Google’s Workspace for Education terms limit the company’s liability. The agreement states that Google disclaims any warranties regarding content accuracy or uninterrupted use of the services. This effectively places the responsibility for verifying AI-generated content on the teachers and schools using the tools.

Why This Matters

Gemini and NotebookLM are designed for more flexible, personalised learning. But right now, the ground reality in schools doesn’t match that design.

As Wadia put it, “The experience of implementing NEP 2020 so far has been disappointing.” She emphasised the need for teacher training, autonomy, and systemic support before expecting meaningful change from AI adoption.

To close the gap, India must equip teachers with AI literacy, shift pedagogical practices, and build supporting infrastructure. Without those foundational changes, even the most advanced tools will struggle to deliver real educational impact.

At the same time, schools must tread carefully. Generative AI tools can produce factually incorrect or biased outputs, and their internal logic often remains opaque. If teachers rely on these tools without proper checks, there is a real risk of passing on misleading information to students. Until stronger safeguards and greater transparency are in place, human oversight will remain critical.
