By John Werner, Contributor

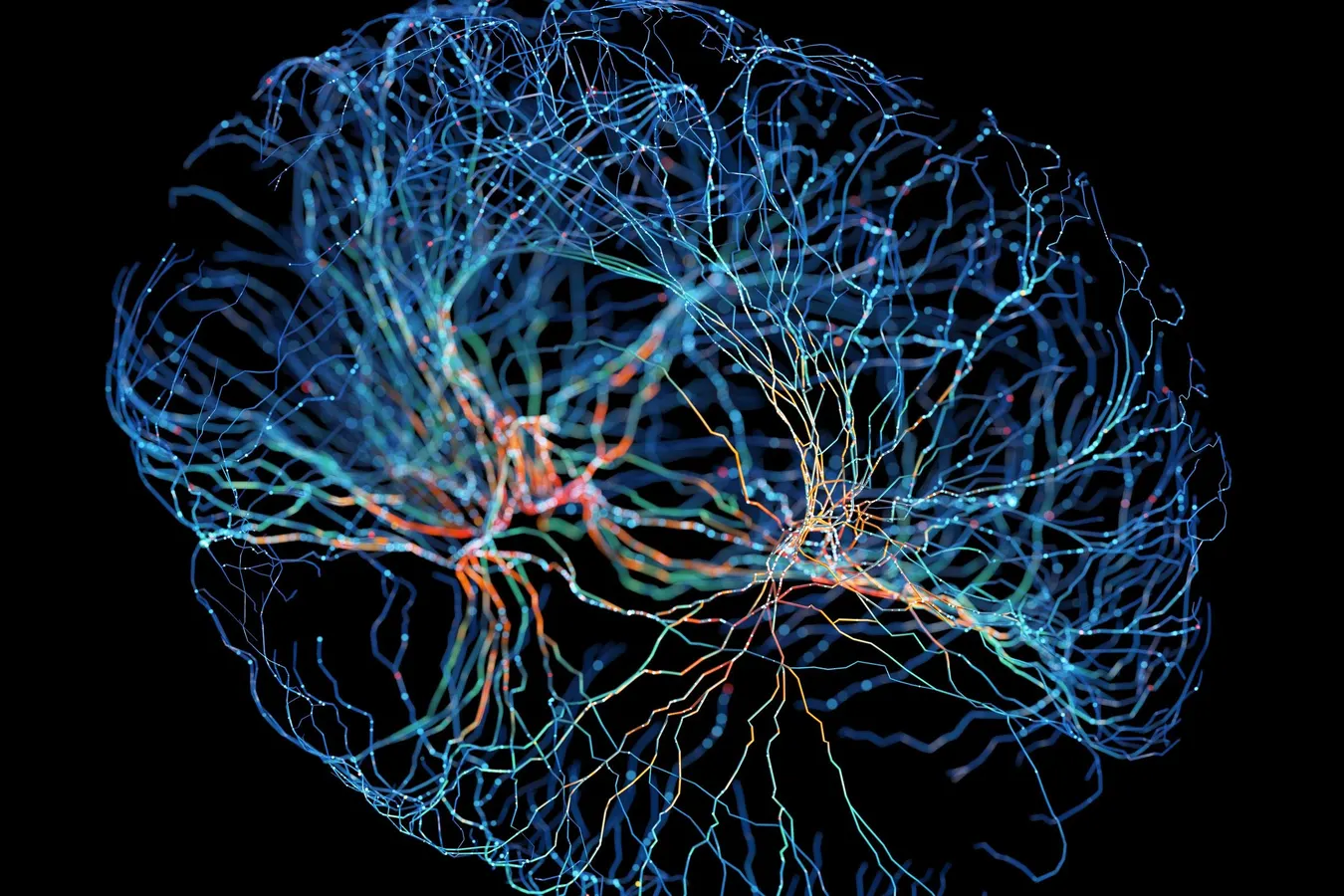

System of neurons with glowing connections on black background

In work at the intersection of AI and neuroscience, one abiding task is to compare biological brains, which develop naturally, with artificial ones built by scientists.

But now there is a second dichotomy: between neural networks, which have only matured into their modern form over the last 10 years or so, and organoid brains grown from biological materials in a laboratory.

If this sounds deeply confusing for neurological research, you’re not alone. There are still a lot of unanswered questions about how the brain works, even with these sophisticated simulations and models.

Terminology and Methodology with Organoids

A couple of weeks ago, I wrote about the process of growing brain matter in a lab. Not just growing brain matter, but growing a small pear-shaped brain, which scientists call an organoid, that apparently can grow its own eyes.

Observing that sort of strange phenomenon feeds our instinctive tendency to connect vision to intelligence – to explore the relationship between the eye and the brain.

People on both sides of the aisle, in AI research and bioscience, have been looking at this relationship. In developing some of the neural net models that are most promising today, researchers were inspired by the primitive visual capabilities of a roundworm called C. elegans, an organism whose study has fed into both AI and medical research.


Returning to the production of brain-like biological organoids: in further research, I found that scientists use stem cells and a substance called “Matrigel,” which grew out of decades of analysis of tumor material in lab mice. There’s a lot to unpack there, and we’ll probably hear much more about it as people realize that these mini-brains exist.

Exploring Vision and Intelligence

One of the tech talks at a recent Imagination in Action event also piqued my interest in this area. It came from Kushagra Tiwary, who talked about exploring “what if” scenarios involving different kinds of evolution.

“One of the first questions that we ask is: what if the goals of vision were different, right? What if vision evolved for completely different things? The second question we’re asking is: We all have lenses in our eyes, our cameras have lenses. What if lenses didn’t evolve? How would we see the world if that didn’t happen? Would we be able to detect food? … Maybe these things wouldn’t happen. And by asking these questions, we can start to investigate why we have the vision that we have today, and, more importantly, why we have the visual intelligence that we have today.”

He had one more question. (Two more questions, really.)

“Our brains also develop at kind of the same pace as our eyes, and one would argue that, you know, we really see with our brains, not with our eyes, right? So what if the computational cost of the brain were much lower?”

He talked about the brain/eye scaling relationship, and key elements of how we process information visually.

Then Tiwary mentioned that this could inform AI research as we build agents in some of the same ways that we humans are built ourselves.

Computer Vision, Robotics, and Industrial Applications

There was another tech talk at the same event that covered collaborative visual intelligence.

Annika Thomas went over some of the characteristics of multi-agent systems in a three-dimensional workspace, including their ability to localize and extract objects. She also covered a technique called “Gaussian splatting,” which represents a 3D scene as a collection of Gaussian shapes, and which informs how we think about information processing between the eye and the brain.

The bottom line is that we have all of these highly complex models – we have the neural nets, which are fully digital, and now we have proto-brains growing in a petri dish.

Then we also have these bodies of research that show us things like how the human brain evolved, how it differs from its artificial alternatives, and how we can continue to drive advancements in this field.

Last, but not least, I recently saw that scientists believe we’ll be able to harvest memories from a dead human brain in about 100 years, by 2125.

Why so long?

I asked ChatGPT, and the answer that I got was threefold: first, the process of decomposition makes the job difficult; second, we don’t have a full map of the human brain; and third, the desired information is stored in delicate structures.

In other words, the memories in our brains are not stored as binary ones and zeros, but in neural structures and synaptic strengths, and those are hard for any outside party to measure.

It occurs to me, though, that if artificial intelligence itself has this vast ability to perceive small differences and map patterns, this type of capability may not be as far away as we think.

That’s the keyword here: think.
