By Anjana Susarla Contributor

What the 99-1 Senate vote against a proposed moratorium on AI oversight tells us

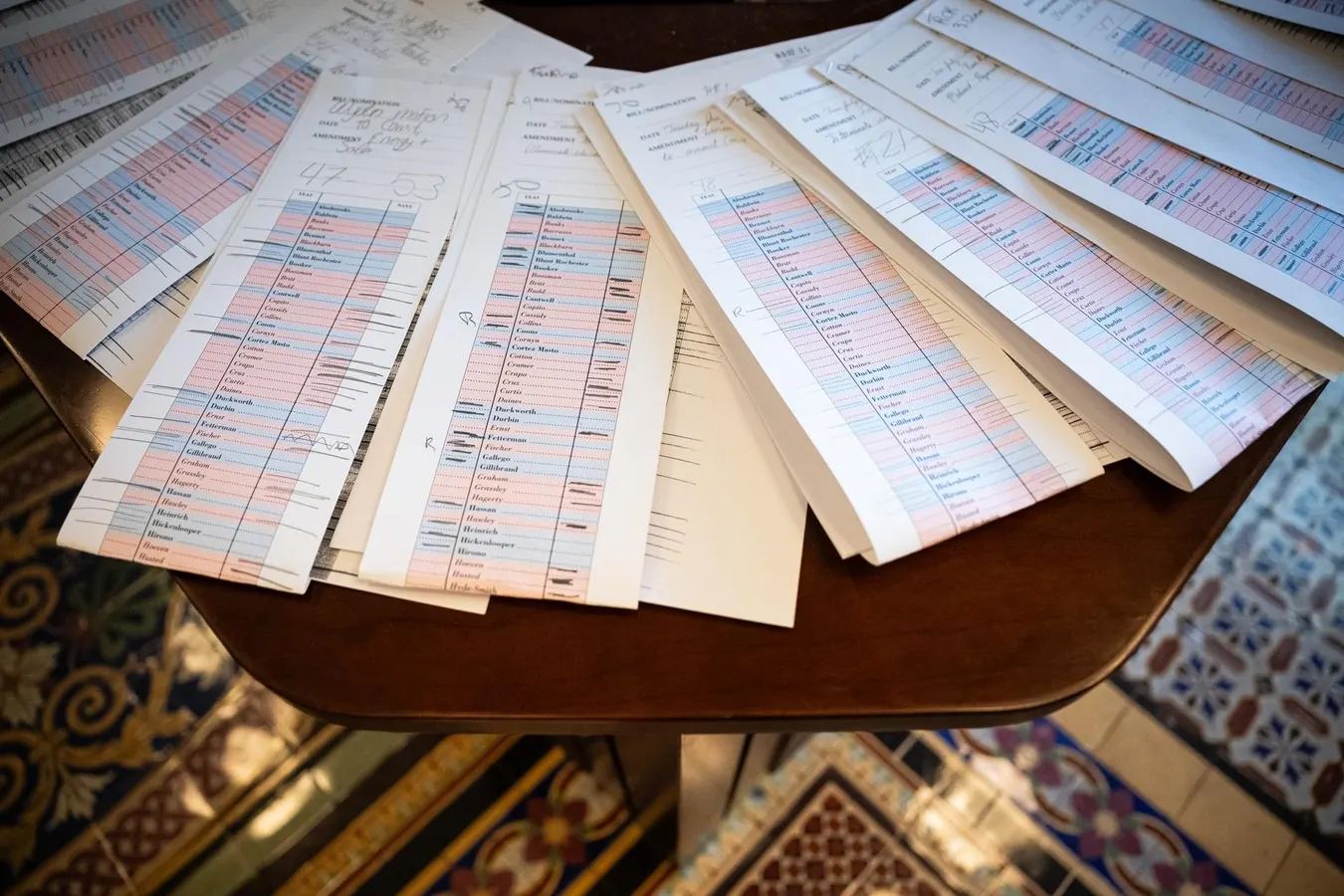

Copies of Senate amendments during a vote-a-rama at the US Capitol in Washington, DC, US, on Tuesday, July 1, 2025. Donald Trump’s $3.3 trillion tax and spending cut bill passed the Senate Tuesday after a furious push by Republican leaders to persuade holdouts to back the legislation and hand the president a political win. Photographer: Graeme Sloan/Bloomberg

© 2025 Bloomberg Finance LP

The One Big, Beautiful Bill Act in its initial draft included a 10-year moratorium on state-level AI oversight, enforced by withholding broadband funds from states that regulate AI. The Senate ultimately voted 99-1 to remove the moratorium from the bill.

On the face of it, a patchwork of regulations across states may be confusing for companies. A uniform regulatory framework could also ease corporate compliance with governmental policies.

However, the sweeping scope of the moratorium would have had far-reaching consequences for consumer privacy protections and for the use of AI in sensitive sectors such as healthcare.

In 2024, at least 40 U.S. states introduced some form of AI-related legislation. In 2025, all 50 states have done so. A blanket moratorium on AI legislation would have effectively superseded state-level laws and statutes regarding the use of AI.

Why AI oversight is like the game of Clue

Let me illustrate the problem of AI accountability with an example from the public sector. In the state of Michigan, more than 34,000 individuals were accused of unemployment fraud between 2013 and 2015, after the Michigan Unemployment Insurance Agency deployed a new automated decision-making system. The state subsequently reviewed those claims and found that several had been algorithmically generated, with minimal human intervention.

Several states in the US have moved to address such concerns about the widespread use of AI in governmental functions by creating new oversight bodies, requiring impact assessments and taking similar measures. In a case such as that of the Michigan Unemployment Insurance Agency, such oversight could have helped establish a criticality threshold for automated decisions. Algorithmic oversight could also help create fallback options and correction mechanisms.

As the Michigan example highlights, algorithmic oversight became possible only after a slew of complaints over wrongful determinations of fraud. Law professor Sonia Gipson Rankin argues that Michigan citizens accused of welfare fraud found themselves in a guessing game over who was liable: was it the unemployment agency, technology vendors or management consultants?

AI Oversight Requires a Civic Dialog

AI law and ethics and legal concepts artificial intelligence law and online technology of legal regulations Controlling artificial intelligence technology is a risk. Judicial gavel and law icon

AI governance requires a constructive conversation between AI developers, firms, consumer rights activists and governmental entities to recognize emerging risks and build shared resources. Algorithmic auditing also requires credentialing, impact assessments, training and risk mitigation practices. Initiatives such as regulatory sandboxes allow authorities to engage firms to test innovative products or services that challenge existing legal frameworks.

Developers of AI have long been aware of the issues of bias, trustworthiness and accountability in AI. Companies such as Microsoft and Google release annual reports on their responsible AI efforts. Governments across the US can learn from such examples of accountability and transparency with AI.

Statewide laws and regulations should not be viewed solely as hindrances to progress in AI. AI safety requires systematic efforts and a body of knowledge about risks from AI deployment, as well as a set of shared resources to facilitate AI experimentation. We are witnessing an emerging ecosystem of third-party AI evaluators. In the transportation sector, the National Highway Traffic Safety Administration (part of the US Department of Transportation) regularly releases compliance test reports for automobile safety. AI safety could operate along similar lines.

Rolling back statewide laws would impede the progress already made at the state level. While a comprehensive federal framework might be preferable to a patchwork of AI oversight, doing nothing at all is not an option.